Introduction: A Quiet Revolution Behind Every Lens

In the era of digital transformation, a silent revolution is reshaping how we perceive the role of cameras. No longer limited to passive recording, the IoT camera has evolved into an intelligent edge device capable of sensing, analyzing, and even making autonomous decisions. This shift transcends a mere technological upgrade—it signifies a fundamental restructuring of the global value chain, transforming cameras into the “neural infrastructure” of the connected digital world.

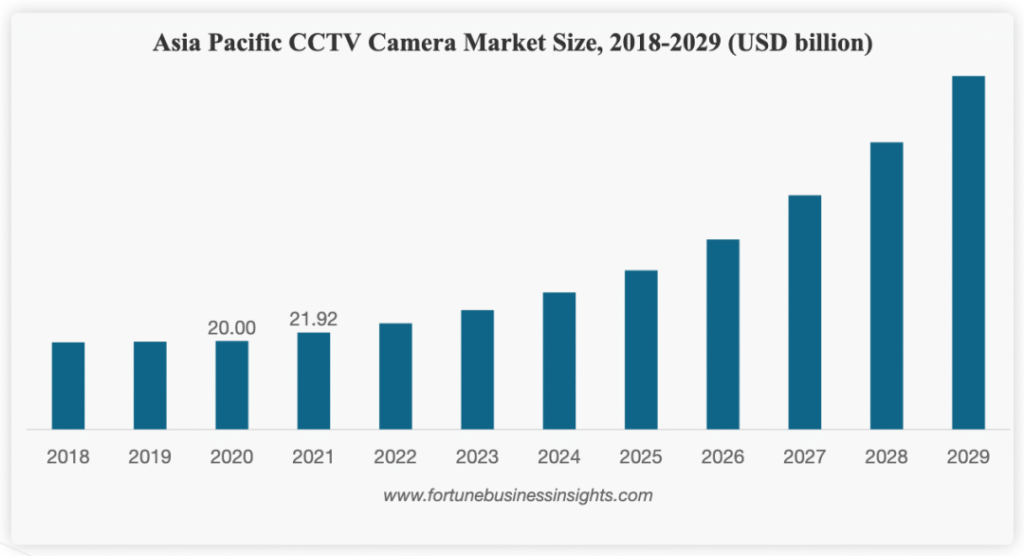

The traditional surveillance era is drawing to a close. According to Fortune Business Insights, the global video surveillance market is expected to reach USD 1 trillion by 2029, growing at a CAGR of 16.8%. Within that, the AI-powered video analytics segment is expanding even faster, at over 30% annually.

This rapid acceleration signals a fundamental shift: cameras are no longer built just to “see,” but to “understand.” As AI and IoT technologies converge, a new class of intelligent visual sensors is emerging—devices that not only observe but also interpret and act.


The Six Generations of Camera Evolution

The journey from analog recorders to intelligent vision systems mirrors the broader evolution of technology: from passive to proactive, from isolated to interconnected, and from reactive to predictive.

- The Passive Recording Era

Early CCTV systems served as basic recorders. They stored footage on magnetic tapes or hard drives and required manual review. The process was slow, reactive, and purely forensic, valuable only after incidents had already occurred.

- The Digital and Networked Era

The arrival of IP cameras and digital video recorders marked the first major leap. Remote access and higher storage efficiency improved monitoring convenience, but human operators still played the central role.

- The Dawn of Basic Intelligence

The introduction of motion detection and simple video analytics brought cameras into the early stages of automation. Cameras could now detect changes in a scene and trigger alerts, but often with high false alarm rates and limited accuracy.

- The Edge Intelligence Revolution

With AI chips and edge computing maturing, cameras gained on-device inference capabilities. Features like facial recognition, license plate reading, and behavior analysis could now run locally in real time. According to research published in Scientific Reports (Nature Portfolio), such systems achieve sub-second response times and over 95% accuracy, transforming cameras from observers into analytical assistants.

- The Multi-Modal Integration Phase

Modern cameras integrate multiple sensing modalities—combining visual, audio, environmental, and biometric data. This fusion enhances situational awareness and context understanding, allowing cameras to perceive the world as a cohesive system rather than isolated signals.

- The Age of Predictive Intelligence

The next frontier lies in fully autonomous vision systems. Powered by deep learning and reinforcement learning, these cameras will predict potential events and act independently. They represent a leap from reactive monitoring to predictive decision-making.

Over six generations, performance indicators have improved exponentially—latency reduced from hours to milliseconds, detection accuracy surpassed human levels, and computational capacity scaled from single streams to thousands of simultaneous inputs.
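
The motion detection of the basic-intelligence generation is often illustrated with frame differencing, one of the simplest techniques of that era. The minimal sketch below assumes grayscale frames stored as nested lists, and its `pixel_threshold` and `area_threshold` values are hypothetical. It is meant only to show why such systems raised so many false alarms: a global lighting change looks exactly like real motion.

```python
from typing import List

# Assumption: a frame is a 2-D grid of grayscale intensities (0-255).
Frame = List[List[int]]


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Return the fraction of pixels whose intensity changed by more than pixel_threshold."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_alert(prev: Frame, curr: Frame,
                 pixel_threshold: int = 25, area_threshold: float = 0.02) -> bool:
    """Alert when more than area_threshold of the scene changed between two frames."""
    return changed_fraction(prev, curr, pixel_threshold) > area_threshold


# A small bright object entering an otherwise static 8x8 scene.
still = [[10] * 8 for _ in range(8)]
person = [row[:] for row in still]
for y in range(2, 4):
    for x in range(2, 4):
        person[y][x] = 200

# Lights switching on: every pixel brightens, but nothing actually moved.
lit = [[80] * 8 for _ in range(8)]

print(motion_alert(still, person))  # True: genuine motion
print(motion_alert(still, lit))     # True: a false alarm caused by lighting
print(motion_alert(still, still))   # False: no change
```

Tuning the thresholds only trades missed events against false alarms; no threshold separates a lighting change from motion, which is the gap that learned detectors later addressed.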

From Silos to Ecosystems: The Architectural Revolution

Yesterday’s surveillance systems were isolated silos—each camera operated independently, storing data locally and offering limited long-term value. Today, intelligent visual networks are reshaping that landscape into interconnected ecosystems.

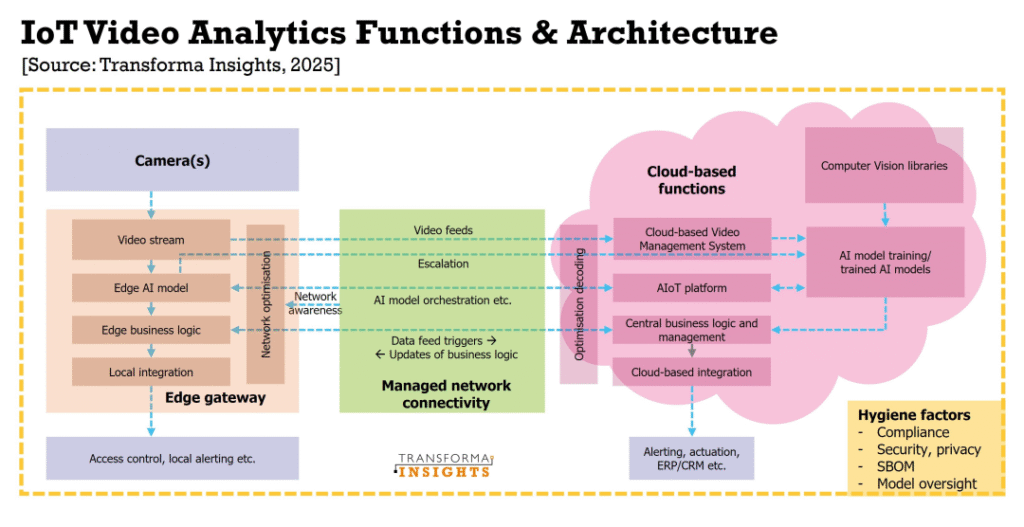

Edge-Cloud Collaboration

The hybrid edge–cloud model has become the backbone of modern architectures. Research shows that such systems can maintain 99.8% uptime and sub-500ms latency by distributing workloads intelligently.

- At the edge, AI-enabled cameras perform object detection, behavior recognition, and anomaly detection in real time—reducing bandwidth needs and ensuring instant responses.

- In the cloud, aggregated data from thousands of edge devices enables cross-regional analysis, long-term optimization, and continuous AI model training.
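
The edge side of this split can be sketched as a filter that keeps raw video on the device and forwards only detection metadata. This is a minimal sketch, assuming a hypothetical `detect` callable standing in for the on-device model and JSON strings as the uplink format; real deployments would use their own model runtime and messaging protocol, and the sketch says nothing about the uptime or latency figures above.

```python
import json
from dataclasses import dataclass, asdict
from typing import Callable, Iterable, List, Optional, Tuple


@dataclass
class Event:
    camera_id: str
    frame_index: int
    label: str
    confidence: float


# Hypothetical detector interface: returns (label, confidence) or None.
Detector = Callable[[bytes], Optional[Tuple[str, float]]]


def edge_filter(frames: Iterable[bytes], detect: Detector,
                camera_id: str, min_confidence: float = 0.5) -> List[str]:
    """Run detection on the device and keep only compact JSON events for the cloud."""
    uplink: List[str] = []
    for i, frame in enumerate(frames):
        result = detect(frame)
        if result is None:
            continue  # nothing detected: the raw frame never leaves the device
        label, confidence = result
        if confidence >= min_confidence:
            uplink.append(json.dumps(asdict(Event(camera_id, i, label, confidence))))
    return uplink


def fake_detect(frame: bytes) -> Optional[Tuple[str, float]]:
    # Stand-in for an on-device model: "sees" a person when the first byte is 1.
    return ("person", 0.9) if frame[0] == 1 else None


# 100 simulated 50 kB frames; a person appears in every 25th frame.
frames = [bytes([1 if i % 25 == 0 else 0]) * 50_000 for i in range(100)]
events = edge_filter(frames, fake_detect, camera_id="cam-01")

raw_bytes = sum(len(f) for f in frames)
sent_bytes = sum(len(e.encode("utf-8")) for e in events)
print(len(events), raw_bytes, sent_bytes)
```

The cloud then receives a few hundred bytes of events instead of megabytes of video, which is the bandwidth reduction the edge tier is meant to provide.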

Multi-Modal Data Fusion

Vision systems now integrate visual, acoustic, and environmental sensors into unified intelligence frameworks. For instance, in fire detection, modern systems combine thermal imaging, smoke detection, sound analysis, and gas sensing to minimize false alarms—reducing error rates by over 80%, according to field studies.
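
One simple way to combine modalities is k-of-n voting: raise the alarm only when at least k sensors agree. The sketch below is an illustrative assumption, not the method used in the field studies mentioned above. Its false-alarm estimate also assumes that each sensor errs independently with the same probability, which correlated real-world sensors rarely do.

```python
from math import comb
from typing import Dict


def fused_alarm(readings: Dict[str, bool], k: int = 2) -> bool:
    """Raise a fire alarm only when at least k sensing modalities agree."""
    return sum(readings.values()) >= k


def false_alarm_probability(p: float, n: int, k: int) -> float:
    """P(at least k of n sensors false-alarm together), assuming independent
    sensors that each false-alarm with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


readings = {"thermal": True, "smoke": False, "sound": False, "gas": False}
print(fused_alarm(readings))  # False: a hot object alone does not trigger the alarm

print(false_alarm_probability(0.05, n=4, k=1))  # any single sensor can trigger
print(false_alarm_probability(0.05, n=4, k=2))  # two modalities must agree
```

Raising `k` suppresses false alarms but also risks missing a real fire that only one modality detects, so `k` is a tuning choice rather than a fixed rule.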

Resilience and Scalability

Maintaining reliability in complex deployments is critical. Multi-layer redundancy, multi-network protocols (5G, Wi-Fi, LoRaWAN), and microservices architectures ensure stability even under failure. Containerization technologies like Docker and Kubernetes enable automatic scaling, fault recovery, and high availability.
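
At the device level, multi-network redundancy can be read as an ordered failover policy. This sketch assumes a hypothetical `Link` type with a health probe and illustrative capacities: LoRaWAN is modeled at a few kbps, enough for event metadata but not for video. It does not cover the service-level scaling and recovery that Docker and Kubernetes handle.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Link:
    name: str                  # e.g. "5G", "Wi-Fi", "LoRaWAN"
    max_kbps: int              # nominal uplink capacity (illustrative numbers)
    is_up: Callable[[], bool]  # hypothetical health probe


def choose_link(links: List[Link], required_kbps: int) -> Optional[Link]:
    """Return the first healthy link, in priority order, that can carry the payload."""
    for link in links:
        if link.is_up() and link.max_kbps >= required_kbps:
            return link
    return None


def plan_uplink(links: List[Link], video_kbps: int,
                event_kbps: int) -> Tuple[Optional[str], str]:
    """Degrade gracefully: full video, then events only, then buffer on the device."""
    link = choose_link(links, video_kbps)
    if link is not None:
        return link.name, "video"
    link = choose_link(links, event_kbps)
    if link is not None:
        return link.name, "events-only"
    return None, "buffer-locally"


def make_links(five_g: bool, wifi: bool, lora: bool) -> List[Link]:
    # Priority order: fastest link first.
    return [
        Link("5G", 100_000, lambda: five_g),
        Link("Wi-Fi", 20_000, lambda: wifi),
        Link("LoRaWAN", 5, lambda: lora),
    ]


print(plan_uplink(make_links(False, True, True), video_kbps=4_000, event_kbps=1))
# ('Wi-Fi', 'video')
print(plan_uplink(make_links(False, False, True), video_kbps=4_000, event_kbps=1))
# ('LoRaWAN', 'events-only')
print(plan_uplink(make_links(False, False, False), video_kbps=4_000, event_kbps=1))
# (None, 'buffer-locally')
```

Degrading from video to events to local buffering is one reasonable design choice: the camera keeps reporting what matters even when only a low-bandwidth link survives.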

Data Governance and System Evolution

With data volumes growing exponentially, intelligent vision networks rely on tiered storage and data lake architectures to manage real-time and historical data efficiently. Edge devices continually receive model updates from the cloud—creating a self-evolving, adaptive intelligence ecosystem.
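
Tiered storage usually comes down to a policy that maps a clip's age to a storage class. In this minimal sketch the tier names and boundaries (7, 90, and 365 days) are hypothetical placeholders; actual retention periods are set by operational needs and local regulation.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

# Hypothetical tier boundaries: (tier name, maximum age for that tier).
TIERS: List[Tuple[str, timedelta]] = [
    ("hot", timedelta(days=7)),     # fast edge/local storage for recent footage
    ("warm", timedelta(days=90)),   # cheaper storage for investigations and analytics
    ("cold", timedelta(days=365)),  # archival storage, e.g. a data lake
]


def storage_tier(recorded_at: datetime, now: datetime) -> str:
    """Map a clip's age to a storage tier, or 'expire' once past the last boundary."""
    age = now - recorded_at
    for name, max_age in TIERS:
        if age < max_age:
            return name
    return "expire"


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
for days in (1, 30, 200, 400):
    print(days, storage_tier(now - timedelta(days=days), now))
```

Running a policy like this periodically lets recent footage stay close to the cameras for real-time use while older footage moves to cheaper storage for historical analysis and model training.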

This shift from isolated systems to open ecosystems is not merely architectural; it is conceptual. It embodies the transition from hardware-centric to data-driven, service-oriented intelligence.

Beyond Surveillance: The Expanding Value of Vision

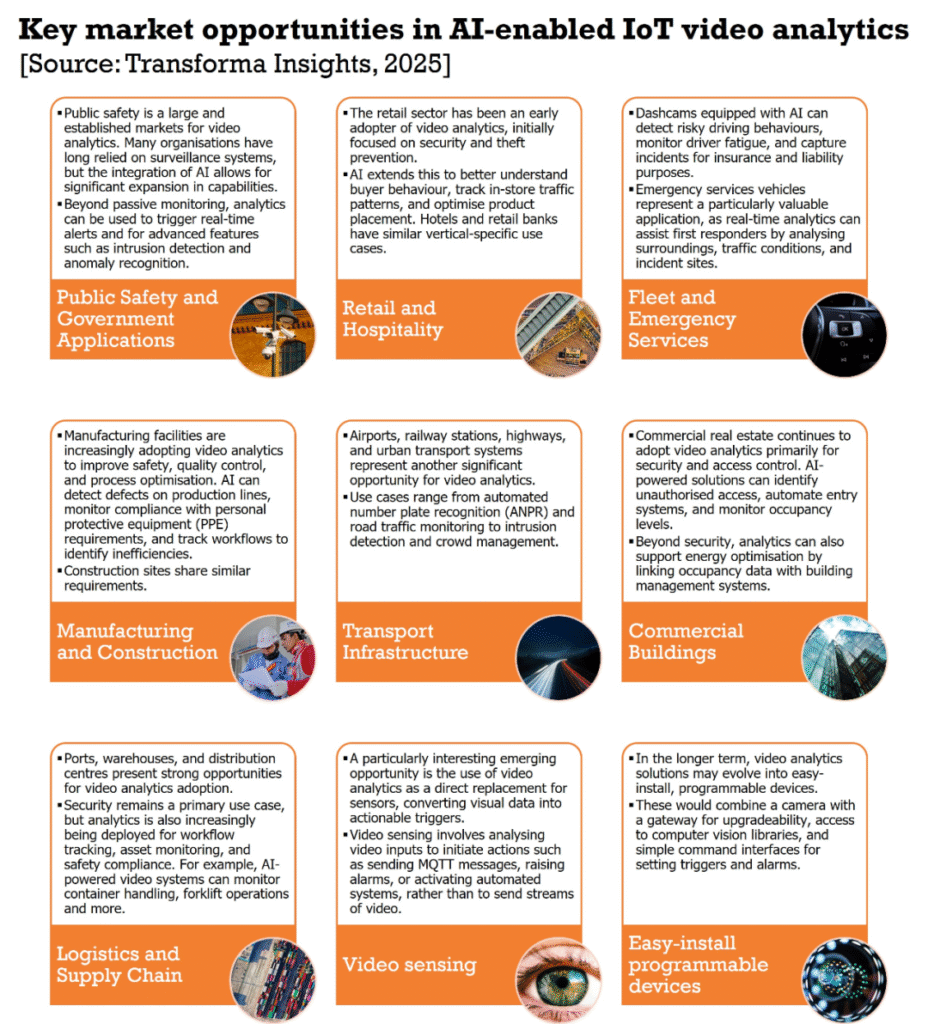

The true potential of intelligent vision systems extends far beyond surveillance. According to Transforma Insights, the fusion of AI and IoT is unlocking entirely new layers of value across multiple industries.

- Public Safety: From Reaction to Prevention

Modern AI-powered IoT systems can detect anomalies and issue alerts within half a second, enabling real-time incident response. More importantly, predictive analytics can identify potential risks, such as crowd surges or traffic congestion, before they escalate, shifting public safety management from reactive control to proactive prevention.

- Smart Manufacturing: Vision as a Quality Engine

In industrial environments, IoT cameras now serve as key components of process control. They detect microscopic defects, optimize workflows, and forecast equipment failures through continuous visual monitoring. This reduces downtime, improves yield, and enables precision manufacturing.

- Retail and Logistics: Data-Driven Efficiency

Smart IoT cameras analyze foot traffic, dwell time, and customer behavior, enabling retailers to optimize store layouts and inventory management. In logistics, vision systems provide real-time visibility, from automated sorting and tracking to unmanned delivery via autonomous vehicles and drones.

- The Data Economy: Vision as a Digital Asset

Video data has become a valuable economic resource. When analyzed with AI, it reveals insights for urban planning, real estate assessment, insurance risk analysis, and more. Each frame transforms into actionable intelligence, empowering better decisions across industries.
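
Retail metrics such as dwell time typically sit on top of an object tracker. This sketch assumes a hypothetical upstream tracker that emits `(track_id, zone, timestamp)` tuples and defines dwell as the time between a track's first and last sighting in a zone; it does not implement detection or tracking itself.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical tracker output: (track_id, zone, timestamp in seconds).
Detection = Tuple[int, str, float]


def dwell_times(detections: Iterable[Detection]) -> Dict[str, Dict[int, float]]:
    """Per zone, seconds between each track's first and last sighting."""
    first: Dict[Tuple[str, int], float] = {}
    last: Dict[Tuple[str, int], float] = {}
    for track_id, zone, ts in detections:
        key = (zone, track_id)
        first[key] = min(first.get(key, ts), ts)
        last[key] = max(last.get(key, ts), ts)
    result: Dict[str, Dict[int, float]] = defaultdict(dict)
    for (zone, track_id), start in first.items():
        result[zone][track_id] = last[(zone, track_id)] - start
    return dict(result)


def average_dwell(times: Dict[int, float]) -> float:
    """Mean dwell time across tracks in one zone (0.0 if the zone is empty)."""
    return sum(times.values()) / len(times) if times else 0.0


detections = [
    (1, "entrance", 0.0), (1, "entrance", 4.0),
    (1, "shelf-A", 10.0), (1, "shelf-A", 70.0),
    (2, "shelf-A", 20.0), (2, "shelf-A", 50.0),
]
per_zone = dwell_times(detections)
print(per_zone["shelf-A"])                 # {1: 60.0, 2: 30.0}
print(average_dwell(per_zone["shelf-A"]))  # 45.0
```

Because dwell is measured first-to-last, a shopper who leaves a zone and returns is counted as one long visit; splitting visits on a time gap would be a natural refinement.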

The Road Ahead: Vision Networks as Digital Infrastructure

Standing at the intersection of AI, IoT, and next-generation networks, Vision Networks (VisNets) are poised to become the neural system of digital civilization—connecting the physical and virtual worlds in real time.

Integration with the Metaverse

In the emerging metaverse, AI-powered IoT cameras enable real-time 3D digital twins of physical spaces. Urban planners could simulate city layouts, traffic flows, or crowd behaviors in a virtual twin before implementing changes in reality, reducing costs and improving decision accuracy.

The 6G Horizon

With 6G promising terabit-per-second speeds and microsecond-level latency, the barriers of bandwidth and delay will vanish. Global, space–air–ground–sea connectivity will make it possible to build a planetary-scale vision network, capable of monitoring environmental shifts, tracking wildlife, and forecasting natural disasters.

Quantum and Bio-Inspired Intelligence

Quantum computing will exponentially accelerate video analysis and pattern recognition, while bio-inspired designs—mimicking the eagle’s precision or insect compound eyes—will expand perception into extreme environments like deep oceans or outer space.

Human–Machine Integration

Advancements in brain–computer interfaces (BCI) will blur the line between human cognition and visual AI. Security officers could control visual feeds through thought, surgeons could guide robotic cameras during complex procedures, and visually impaired users might “see” through connected vision devices.

A New Epoch of Intelligent Vision

From the earliest image-capturing devices to today’s autonomous vision systems, IoT cameras have undergone a profound transformation: technological, industrial, and philosophical.

Through the convergence of AI and IoT, cameras have evolved from passive observers into intelligent agents capable of perceiving meaning, anticipating change, and making decisions. Their role now extends beyond monitoring: they are the perceptive organs of our digital society.

Yet challenges remain: ensuring data privacy, creating sustainable business models, and aligning technological power with ethical frameworks. The future of intelligent vision depends not only on technological innovation but also on collaboration among industries, policymakers, and communities.

As we move toward an age powered by 6G, quantum computing, and AGI, vision systems will not just help us see the world—they will help us understand, predict, and shape it.